Contextual Fit of Evidence-Based Interventions for Children and Families in Scotland

Helping children and young people achieve positive mental health and wellbeing is a priority for Scotland. Positive mental health and wellbeing outcomes for children start with strong, nurturing and supportive relationships between children and those who are most significant to them, both at home and in their wider world. When additional support for mental health and wellbeing is needed at an early stage, evidence-based interventions can play a significant role in enhancing the relationships and skills that build resilience within the child and family.

Early help is more likely to be effective when there is a holistic understanding of children’s mental health and wellbeing, because mental health is often shaped by several interacting factors. Specific evidence-based psychological interventions may be one strand among a range of practical and emotional support for children and families.

Intervention selection is the systematic process of choosing an evidence-based or evidence-informed intervention or practice to improve outcomes. Using interventions with a base of evidence is important, but simply choosing an intervention with a strong evidence base is not enough to ensure a good fit with population needs, high-quality implementation, the intended impact, or sustainable implementation.

An intervention may have evidence that it works, but to be successful and have its intended impact, the implementing service needs to know whether it has the resources and capacity to deliver the intervention and to sustain delivery. The intervention also needs to fit the local implementation context: it should meet the needs of the population the service serves and align with local values and priorities, as well as with other services and interventions already running locally. An intervention will only be effective if those who are trained and expected to deliver it are themselves supported and supervised in practice that is both reflective and evaluated. Sustaining such programmes requires strategic leadership.

The Early Intervention (EI) Framework for Children and Young People’s Mental Health and Wellbeing is a database of interventions designed to guide decisions about which interventions or practices should be implemented, or should continue to be implemented. Using this tool to guide decision making is intended to increase the likelihood that service providers select interventions appropriate for their setting and target population, and thereby the likelihood of positive outcomes for children and families. Service providers may also want to explore or reassess the implementation context when an intervention or approach is not achieving its intended results, and the EI Framework can support this assessment.

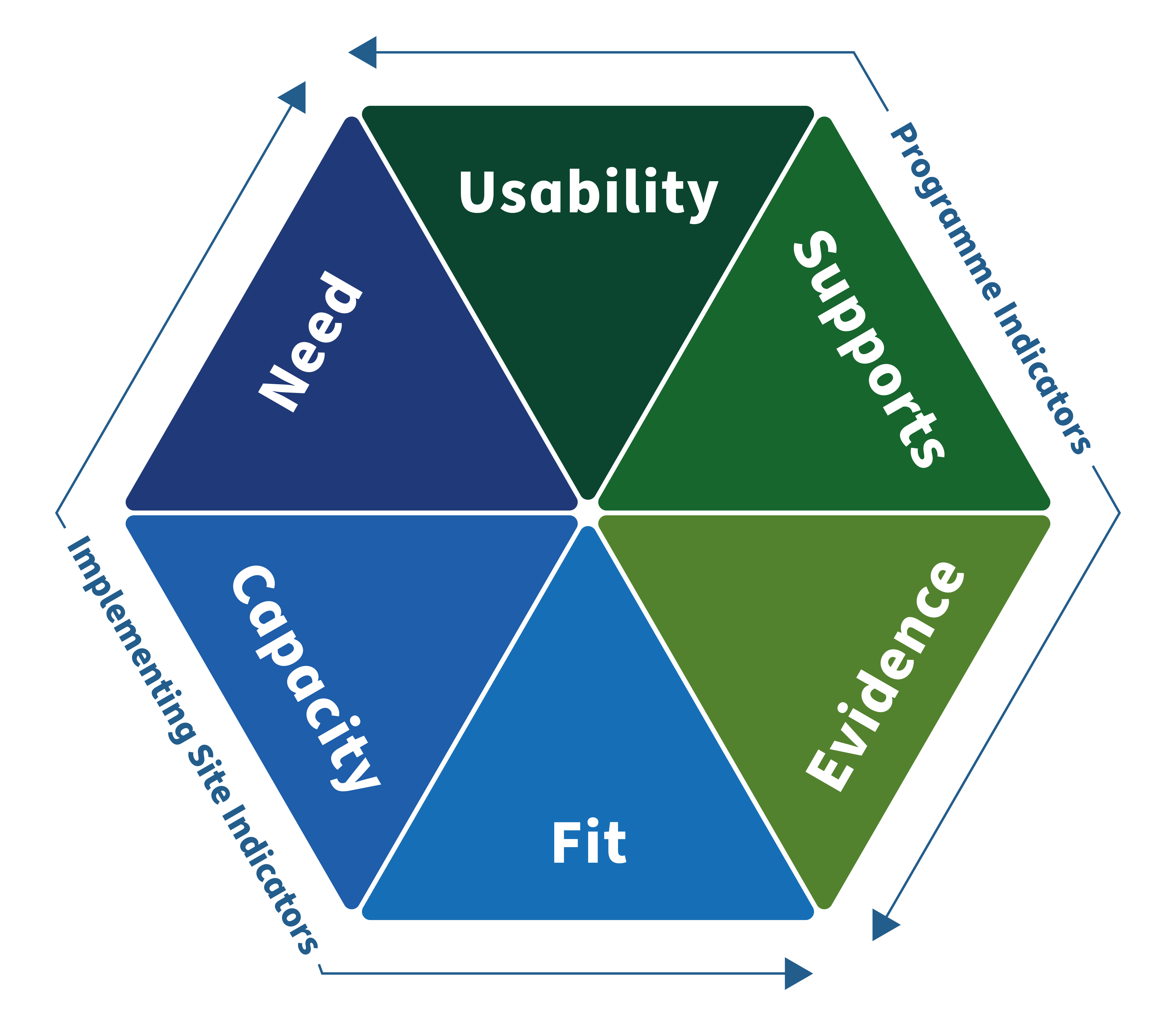

The information provided about each programme in the EI Framework is structured around the Hexagon Tool developed by the National Implementation Research Network (NIRN) at the University of North Carolina at Chapel Hill.

The Hexagon Tool

The EI Framework uses the Hexagon Tool to consider six broad factors. Information is given about each factor to help guide decisions about implementation. The six factors are divided into two categories:

Programme Indicators

Programme Indicators are used to assess new or existing interventions or approaches on the following domains:

- Usability

- Supports

- Evidence

These indicators specify the extent to which the identified intervention or approach demonstrates evidence of effectiveness, has sources of support available for implementation, and is usable across a range of contexts.

Implementing Site Indicators

Implementing Site Indicators are used to assess the extent to which a new or existing intervention or approach matches the place where it is being implemented (the implementing site) on the following domains:

- Fit

- Capacity

- Population need

The assessment sets out suggested conditions and requirements for a strong match on fit, capacity and need for the identified intervention or approach.

Development of the Early Intervention Framework

Information about each intervention has been gathered from a range of sources. Each intervention summary includes information from research findings, administrative data, interviews and document reviews. Based on this information and the Hexagon rating criteria, a score is assigned to each of the three Programme Indicators. The Implementing Site Indicator sections contain information and prompt questions so that each implementing site can score those indicators based on its specific context and the Hexagon Tool rating scale.

Programme Indicators (usability, supports, evidence)

The EI Framework provides ratings for the three Programme Indicators:

- Usability – the level of operationalisation of an intervention or approach, including the extent to which core components have been identified and defined, the availability of a fidelity assessment, and guidance on acceptable modifications to enhance contextual fit.

- Supports – the documented level of available resources to support implementation, including resources to build staff competency and to create the organisational and systems changes needed to sustain service delivery.

- Evidence – the documented level of effectiveness of the intervention or approach, based on study design, number of studies, and population outcomes.

Implementing Site Indicators (fit, capacity, population need)

The EI Framework provides information for service providers to consider for the three Implementing Site Indicators. Service providers can rate how well a potential intervention or practice fits their own context on the following:

- Fit – whether the intervention or approach fits with the priorities of the implementing site, community values, and other existing services and initiatives.

- Capacity – the extent to which the implementing site has the necessary capacity, including the qualified staff and the financial, technology and administrative supports required to implement and sustain the intervention with integrity.

- Population need – whether the implementing site has sufficiently documented the needs of the population it serves, and whether rigorous research with a comparable population shows that the intervention or approach under consideration meets those needs.

It is recommended that a stakeholder group be involved in rating the three Implementing Site Indicators and in making decisions about implementing an intervention. Using the Hexagon Tool should be a collaborative process involving individuals with diverse perspectives (e.g. families, practitioners, leaders, community partners). A diverse team is an effective structure for completing the assessment: it brings a broad range of viewpoints and allows other stakeholder groups to be engaged when additional perspectives are needed. This process supports a well-informed decision about the use and/or adaptation of an intervention or approach. As part of the decision-making process, teams should seek a shared understanding of the issues so that consensus can be reached when scoring each domain.

Intervention Review Process

To produce and rate the collection of interventions in the EI Framework, the following multi-step method was used:

- Intervention summaries were completed based on available research findings in peer-reviewed publications and grey literature, and on interviews with intervention developers.

- The summaries include descriptions of both the programme indicators and the implementing site indicators.

- Programme developers reviewed the intervention summaries and offered comments.

- Reviewers scored the three programme indicators based on the intervention summaries, with every programme indicator scored by two independent coders.

- Differences in scores were reconciled by a review team.

- For a sample of interventions, practitioners’ views on the scores were gathered through surveys.

- Scores and descriptions were revised where required based on practitioners’ input.